$33 Billion Says Distributed Deployment Is No Longer Optional

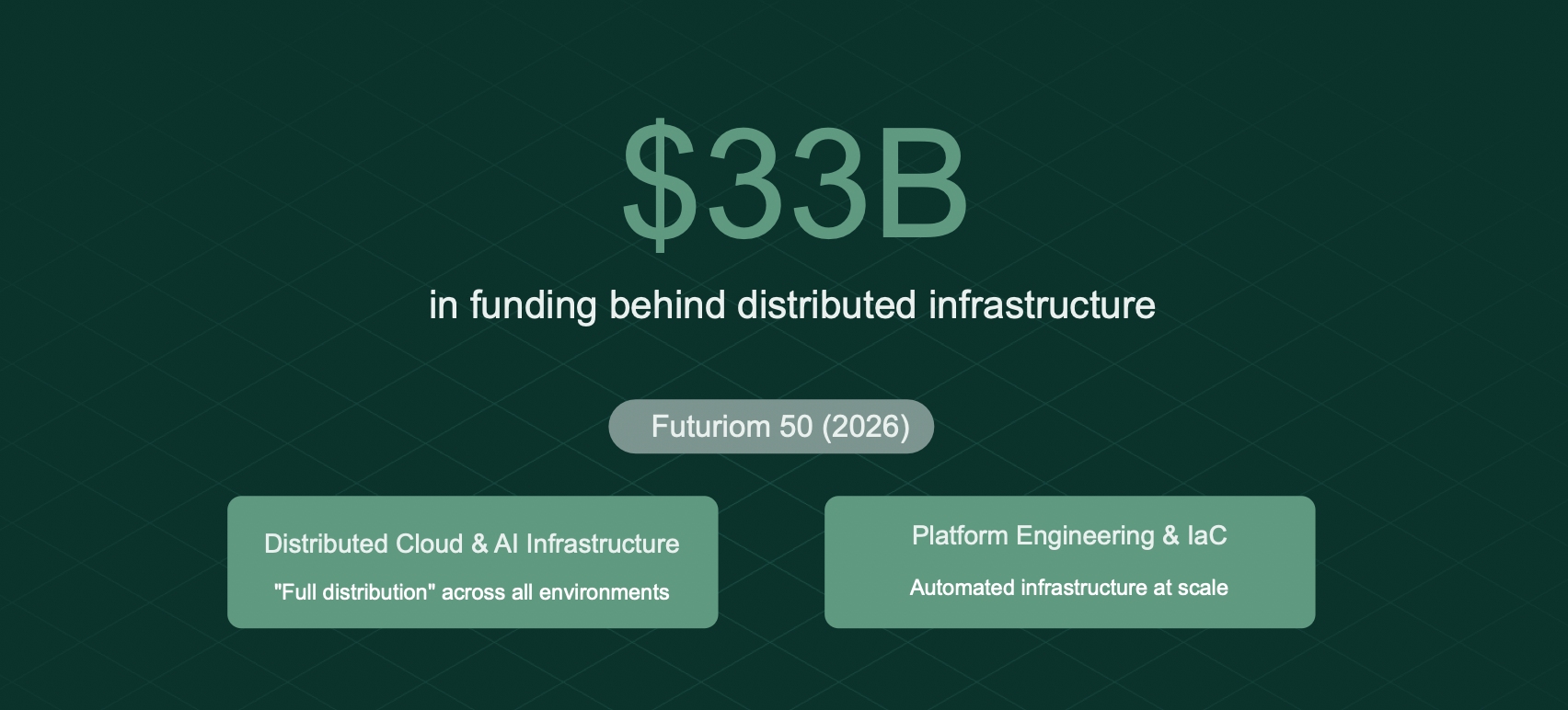

For years, “on-prem” felt like a legacy problem. It was something enterprises would eventually outgrow, something vendors could safely defer to a future roadmap. The 2026 Futuriom 50 report puts $33 billion behind the opposite conclusion.

Distributed deployment (software that runs in customer-controlled environments) is no longer a niche requirement. The market is moving in this direction, and the pace is picking up.

The Market Proof

The Futuriom 50 tracks 50 private companies reshaping cloud infrastructure. This year, a few trends dominate.

Trend #1: Distributed AI Infrastructure. AI workloads are forcing “full distribution,” meaning software needs to run across customer clouds, private infrastructure, and edge environments simultaneously. Centralized compute cannot keep up with the latency and throughput that modern AI demands. The infrastructure has to go where the data lives.

Trend #4: Platform Engineering and IaC. The growth of hybrid platforms and applications means teams need a more automated way to connect infrastructure. Manual configuration does not scale when you’re deploying across multiple clouds, customer VPCs, and on-prem environments. Infrastructure as Code has become the foundation.

These 50 companies represent $33 billion in combined funding. All of it is a bet on the same direction: enterprises need software that deploys consistently across any environment, managed entirely through code.

What We See Every Week

We work with software vendors navigating this shift, and the pattern has become remarkably consistent.

A vendor builds on AWS (or another hyperscaler) using RDS, ElastiCache, EKS, and other managed services that let them move fast. The architecture is clean, the product works well, and customers are happy. Then an enterprise deal shows up with real revenue attached, but security review comes back with a familiar requirement: “Deploy in our environment. Our VPC. Our controls. Non-negotiable.”

We’ve seen this play out dozens of times across different industries. AI companies discover that their customers won’t let training data leave their own infrastructure. Healthcare prospects cite HIPAA requirements that demand on-premises deployment. Financial services firms require SOC 2 controls they manage themselves. Government buyers operate air-gapped networks where external connectivity is not an option.

The Real Cost of Waiting

Vendors who try to retrofit deployment flexibility after the fact end up paying for it in ways that go beyond engineering hours.

We have watched companies spend 12 to 18 months building a second deployment model. They pull engineers off the core product to work on infrastructure. They end up maintaining two codebases that inevitably diverge. The customer-deployed version falls behind the cloud version. Features ship slower across the board, and the entire organization feels the drag.

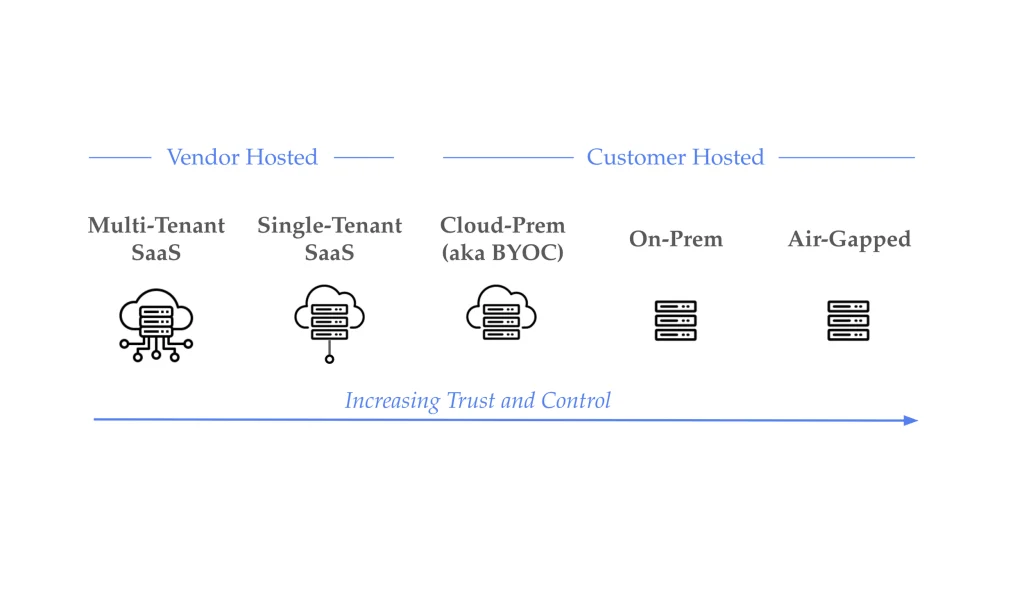

This Isn’t Just About “On-Prem”

The framing of “on-prem vs. cloud” misses the point. The real question is whether your software can meet customers where they are.

Sometimes that means their AWS account instead of yours. Sometimes it is a Kubernetes cluster they manage themselves. Sometimes it is an air-gapped environment with no external connectivity at all.

Trend #4 makes the path clear: the only way to achieve this at scale is through Infrastructure as Code. You cannot manually configure your way to multi-environment deployment. You need infrastructure that is defined once and deployable anywhere, which is exactly what platform engineering teams have been building toward.
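To make “defined once and deployable anywhere” a little more concrete, here is a minimal Python sketch of the idea. It is not how any particular tool works, and in practice you would express this in Terraform, Pulumi, or a similar tool. Every name in it (`AppSpec`, `Target`, `render`, the registry hostnames, the telemetry values) is hypothetical and chosen only for illustration. It also assumes that an air-gapped target keeps telemetry local.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppSpec:
    """The single definition of what gets deployed (hypothetical shape)."""
    name: str
    image: str      # image name and tag, without a registry prefix
    replicas: int


@dataclass(frozen=True)
class Target:
    """One environment to deploy into (hypothetical shape)."""
    name: str
    registry: str        # registry reachable from inside that environment
    allow_egress: bool   # False for air-gapped networks


def render(spec: AppSpec, target: Target) -> dict:
    """Combine the shared spec with per-target settings into one config."""
    return {
        "deployment": f"{spec.name}-{target.name}",
        "image": f"{target.registry}/{spec.image}",
        "replicas": spec.replicas,
        # Assumption: air-gapped targets cannot ship telemetry out.
        "telemetry": "remote" if target.allow_egress else "local-only",
    }


# One spec, defined once.
SPEC = AppSpec(name="api", image="api:1.4.2", replicas=3)

# Many targets; only the environment-specific details differ.
TARGETS = [
    Target("vendor-aws", "registry.vendor.example", allow_egress=True),
    Target("customer-aws", "registry.customer.example", allow_egress=True),
    Target("customer-airgap", "registry.internal.example", allow_egress=False),
]

if __name__ == "__main__":
    for target in TARGETS:
        print(render(SPEC, target))
```

The design point is that the application definition never forks. Adding a new customer environment means adding one more `Target` entry, not a second codebase.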

What This Means

The Futuriom 50 is not a prediction about where the market might go. It is documentation of where capital is already flowing. The enterprises writing those checks have made their requirements clear, and vendors need to decide how they’re going to respond.

For software vendors, this translates into a straightforward decision: prepare now for customer-controlled deployment, or risk losing deals and momentum when the requirement shows up.

Concretely, that means:

- Auditing your architecture for cloud-provider dependencies that would block customer-environment deployment

- Identifying which managed services need portable equivalents (RDS to PostgreSQL, ElastiCache to Redis or Valkey, and so on)

- Ensuring your infrastructure is defined in code using Terraform, Pulumi, or similar tools, rather than click-ops or tribal knowledge

- Building deployment flexibility before the first enterprise customer asks for it, not after
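As a starting point for the first two items, a rough audit can be scripted. The Python sketch below scans Terraform source text for AWS resource blocks and reports which ones have a known portable equivalent. It makes several assumptions: the mapping table is hypothetical and covers only the RDS and ElastiCache examples mentioned above, it treats every `aws_db_instance` as PostgreSQL-compatible without checking the engine, and it uses a simple regular expression rather than a real HCL parser, so it will miss resources declared inside modules or generated dynamically.

```python
import re

# Hypothetical mapping from Terraform AWS resource types to portable
# equivalents. Extend this table for your own stack.
PORTABLE_EQUIVALENTS = {
    "aws_db_instance": "PostgreSQL",
    "aws_elasticache_cluster": "Redis or Valkey",
    "aws_elasticache_replication_group": "Redis or Valkey",
}

# Matches lines such as: resource "aws_db_instance" "main" {
RESOURCE_RE = re.compile(
    r'^\s*resource\s+"([A-Za-z0-9_]+)"\s+"([A-Za-z0-9_-]+)"',
    re.MULTILINE,
)


def audit_terraform(source: str):
    """Split AWS resources into those with a known equivalent and the rest.

    Returns (mapped, unmapped):
      mapped   -> list of (resource address, portable equivalent)
      unmapped -> list of AWS resource addresses that need manual review
    """
    mapped, unmapped = [], []
    for rtype, name in RESOURCE_RE.findall(source):
        address = f"{rtype}.{name}"
        if rtype in PORTABLE_EQUIVALENTS:
            mapped.append((address, PORTABLE_EQUIVALENTS[rtype]))
        elif rtype.startswith("aws_"):
            unmapped.append(address)
    return mapped, unmapped


SAMPLE = '''
resource "aws_db_instance" "main" {
  engine = "postgres"
}

resource "aws_elasticache_cluster" "cache" {
  engine = "redis"
}

resource "aws_s3_bucket" "assets" {}
'''

if __name__ == "__main__":
    mapped, unmapped = audit_terraform(SAMPLE)
    for address, equivalent in mapped:
        print(f"{address}: portable equivalent is {equivalent}")
    for address in unmapped:
        print(f"{address}: no mapping yet, review manually")
```

The unmapped list is the more useful output: it is the set of provider-specific dependencies nobody has yet decided how to replace in a customer environment.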

The vendors who are doing this work now are closing enterprise deals their competitors can’t touch. When security review comes back with deployment requirements, they’re ready to respond instead of scrambling.

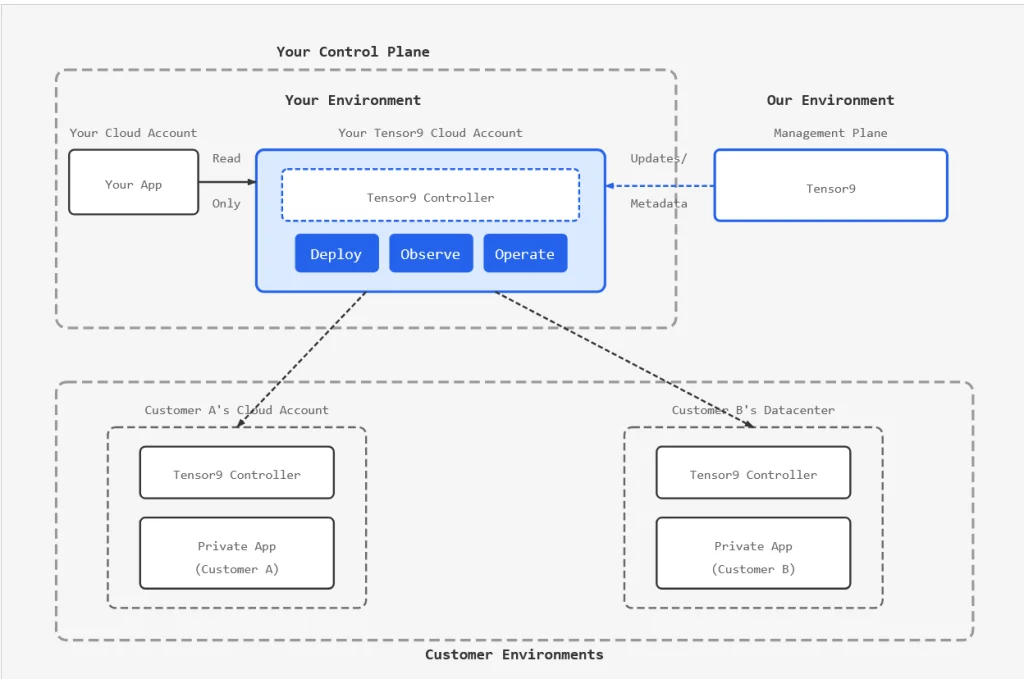

What We Built at Tensor9

We built Tensor9 because we kept seeing the same problem: vendors with excellent products were losing deals simply because they could not deploy into customer environments.

Our approach is straightforward. We take your existing infrastructure (your Terraform, your AWS stack) and compile it into an appliance that deploys into customer-controlled environments, whether that’s the customer’s AWS account, their GCP project, or their on-prem infrastructure.

You do not have to re-architect your entire stack:

Same stack, different target: We translate your managed services (RDS, ElastiCache, S3) into portable equivalents that work in whatever environment the customer requires. You keep building on AWS with the services you know. We handle the portability layer.

Observability orchestration: Logs, metrics, and traces sync back to you from customer environments, which means you can debug and support customers without needing direct access to their infrastructure.

Continuous updates: The system integrates with your existing CI/CD pipeline, so you can push updates across customer deployments the same way you ship to your own cloud.

Remote operations: Both synchronous and asynchronous operations let you support customers using the workflows you’re already comfortable with.

The Window Is Now

Every quarter, more enterprise buyers add “customer-controlled deployment” to their requirements list. AI workloads are accelerating this trend. Security concerns are reinforcing it. And $33 billion in infrastructure funding is actively chasing it.

Prepare early for customer-controlled environments, or risk losing momentum and revenue when it matters most.

Ready to bring your AI or SaaS product to customer environments?

Contact Tensor9 to learn how we can help you reach new markets without rebuilding your stack.

We offer a focused 45-minute assessment to help your team:

- Map your current deployment model

- Uncover risks and operational bottlenecks

- Identify a streamlined path to delivering in enterprise customer environments without custom installs or operational sprawl